Dolby Laboratories is an American company specializing in audio processing and encoding technologies, as well as multi-channel surround sound systems.

These days, most people associate the Dolby brand with cinema surround sound, but the company began with the development of professional noise reduction (NR) systems.

Dolby Laboratories (Dolby Labs) was founded by the American engineer Ray Dolby in 1965. By the time he started his own company, Dolby held several academic degrees and had gained a wealth of experience from his research and his work at Ampex.

Ray Dolby

Ray Dolby's company was initially based in London; in 1976 its headquarters moved to San Francisco (USA). In the early days, Dolby employed only a few engineers besides its founder. Today its staff numbers in the thousands (as of 2015, Dolby Labs employed more than 1,800 people).

Dolby NR noise reduction system

In the year the company was founded, Ray Dolby invented a noise reduction system known as “Dolby NR” (a US patent was granted a few years later). The first version of the system, Dolby A, was developed exclusively for professional use, and Decca Records was its first user. Later, in 1967, Dolby Labs began developing a simplified system, Dolby B, for home tape recorders.

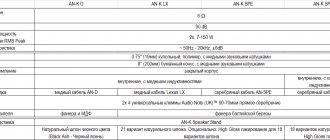

Dolby A

The goal was to minimize the background hiss heard when playing recordings on magnetic tape. The approach was companding: during recording, the dynamic range of the material is deliberately compressed, so that quiet components in certain frequency bands are recorded at an increased level, well above the tape hiss. During playback, the decoder expands the dynamic range by attenuating those same components by the same amount, which restores the original balance and lowers the tape noise along with them.
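The companding principle can be illustrated with a minimal Python sketch. It assumes an instantaneous 2:1 power-law compander (a textbook stand-in, not Dolby's actual sliding-band or multiband circuits) and models tape hiss as small uniform random noise added between encoder and decoder; `compress`, `expand`, the signal level and the hiss level are all illustrative choices.

```python
import math
import random

def compress(x: float) -> float:
    """Encoder: 2:1 power-law compression; quiet samples are raised toward full scale."""
    return math.copysign(math.sqrt(abs(x)), x)

def expand(y: float) -> float:
    """Decoder: exact inverse of compress(); quiet samples are pushed back down."""
    return math.copysign(y * y, y)

def snr_db(clean, received):
    """Signal-to-noise ratio of a received signal against the clean original."""
    signal = sum(s * s for s in clean)
    noise = sum((r - s) ** 2 for s, r in zip(clean, received))
    return 10 * math.log10(signal / noise)

def demo(n=10_000, amplitude=0.01, hiss=0.001, seed=0):
    rng = random.Random(seed)
    # A quiet 440 Hz tone, the kind of passage where tape hiss is most audible.
    clean = [amplitude * math.sin(2 * math.pi * 440 * i / 44_100) for i in range(n)]
    tape_noise = [rng.uniform(-hiss, hiss) for _ in range(n)]
    # Without noise reduction: the hiss lands directly on the quiet signal.
    plain = [s + t for s, t in zip(clean, tape_noise)]
    # With companding: compress, add the same hiss on "tape", then expand.
    companded = [expand(compress(s) + t) for s, t in zip(clean, tape_noise)]
    return snr_db(clean, plain), snr_db(clean, companded)

plain_db, companded_db = demo()
print(f"SNR without companding: {plain_db:.1f} dB")
print(f"SNR with companding:    {companded_db:.1f} dB")
```

Because the decoder is the exact inverse of the encoder, the music itself comes back unchanged, while the hiss added in between is scaled down together with the quiet passages. The trade-off is that loud passages end up slightly noisier, which is acceptable because there the signal itself masks the noise.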

As early as 1968, Dolby B was used in KLH reel-to-reel tape recorders. Three years later, the first cassette decks with Dolby B decoders appeared on the market, and mass production of Dolby-encoded prerecorded cassettes began in the USA and Great Britain. By the early 1970s, Dolby Labs was licensing its noise reduction technology to dozens of companies that used it in their products.

KLH Model 40

Technological progress led to more advanced consumer NR systems: Dolby C was introduced in 1981 and Dolby S in 1990. These versions of Dolby NR were more effective, but never became widespread.

The problem with Dolby C was that it practically required new, dedicated equipment: Dolby C cassettes sounded poor on tape recorders without the appropriate circuitry.

The built-in Dolby B decoders could not handle the format properly. Consumers did not want to listen to music in poor quality, and most were not ready to buy a new generation of players.

Dolby SR

Dolby S delivered sound quality unprecedented for compact cassettes. It was also more compatible with Dolby B tape recorders (playback produced significantly less distortion than with Dolby C). However, Dolby S arrived too late: by then, CDs had already conquered the market.

In addition to these consumer systems, Dolby developed Dolby FM (noise reduction for FM radio broadcasting) and, in 1986, the professional Dolby SR system, which replaced Dolby A. By the early 1990s, SR technology was in use at thousands of recording studios.

Dolby SR

Dolby SR was also used in cinema. The complexity of this professional method prevented its implementation in ordinary consumer equipment, but for studios, Dolby SR has become the standard.

How Dolby Labs solved the problem

First, briefly, about the essence of the problem itself.

Digital audio encoding as used on the Compact Disc (16-bit pulse code modulation, PCM) provides a dynamic range of about 96 dB. For each channel, the signal is sampled 44,100 times per second, and every sample is stored or transmitted as a 16-bit word.

With more than two channels, the data volume grows dramatically, and with the technology available at the time it was simply impossible to record or transmit it in uncompressed form. The first task was therefore to devise an efficient digital audio coding algorithm that would reduce the data rate as much as possible while leaving the perceived sound quality unaffected. Systems built on such algorithms are called “perceptual” (here the term means “based on the characteristics of human auditory perception”).
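The arithmetic behind these figures is easy to check. The following sketch (function names are illustrative) computes the uncompressed PCM data rate and the ratio between full scale and one quantization step; the six-channel case simply reuses the CD parameters from the text to show how fast the data rate grows.

```python
import math

def pcm_bitrate(bits_per_sample: int, sample_rate_hz: int, channels: int) -> int:
    """Uncompressed PCM data rate in bits per second."""
    return bits_per_sample * sample_rate_hz * channels

def pcm_dynamic_range_db(bits_per_sample: int) -> float:
    """Ratio between full scale and one quantization step: 20*log10(2**bits)."""
    return 20 * math.log10(2 ** bits_per_sample)

if __name__ == "__main__":
    print(f"16-bit dynamic range: {pcm_dynamic_range_db(16):.1f} dB")
    for channels in (1, 2, 6):
        rate = pcm_bitrate(16, 44_100, channels)
        print(f"{channels} channel(s): {rate / 1e6:.2f} Mbit/s")
```

For CD parameters this gives about 96.3 dB and 1.41 Mbit/s for stereo; six channels at the same resolution already need about 4.23 Mbit/s, which is why multichannel audio called for perceptual compression.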

The very first version of the system, Dolby AC-1, was developed in 1985 for digital satellite broadcasting (DBS) by the Australian Broadcasting Corporation. Thanks to the low cost of its decoder, AC-1 was also used in other DBS services, such as cable broadcasting and satellite communication networks. The data rate was 220-325 kbit/s per channel. The data stream carries not the absolute level of the audio signal but its change from sample to sample; this method is called ADM (Adaptive Delta Modulation).
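Adaptive delta modulation can be sketched in a few lines. The encoder sends one bit per sample, saying only whether the input is above or below a running estimate; the step size grows while the bits repeat (the estimate is lagging behind) and shrinks while they alternate (the estimate is hunting around the signal). The decoder mirrors the same rules, so it rebuilds the estimate from the bits alone. The step-size constants and function names below are hypothetical; the article does not describe AC-1's actual parameters.

```python
import math

# Hypothetical step-size rules, not AC-1's real values.
STEP_MIN = 0.01
STEP_MAX = 0.5
GROW = 1.5     # repeated bits: the estimate lags, so take bigger steps
SHRINK = 0.66  # alternating bits: the estimate hunts, so take smaller steps

def _next_step(step, bit, prev_bit):
    """Shared adaptation rule used identically by encoder and decoder."""
    if prev_bit is None:
        return step
    if bit == prev_bit:
        return min(step * GROW, STEP_MAX)
    return max(step * SHRINK, STEP_MIN)

def adm_encode(samples):
    """Return one bit per sample plus the encoder's running estimate."""
    bits, estimates = [], []
    estimate, step, prev_bit = 0.0, STEP_MIN, None
    for x in samples:
        bit = 1 if x >= estimate else 0
        step = _next_step(step, bit, prev_bit)
        estimate += step if bit else -step
        bits.append(bit)
        estimates.append(estimate)
        prev_bit = bit
    return bits, estimates

def adm_decode(bits):
    """Rebuild the signal from bits alone by mirroring the encoder's step logic."""
    out = []
    estimate, step, prev_bit = 0.0, STEP_MIN, None
    for bit in bits:
        step = _next_step(step, bit, prev_bit)
        estimate += step if bit else -step
        out.append(estimate)
        prev_bit = bit
    return out

signal = [math.sin(2 * math.pi * i / 200) for i in range(400)]
bits, encoder_view = adm_encode(signal)
decoded = adm_decode(bits)
mean_error = sum(abs(d - s) for d, s in zip(decoded, signal)) / len(signal)
print(f"{len(bits)} bits sent, mean tracking error {mean_error:.3f}")
```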

Next came Dolby AC-2, with an improved digital coding algorithm. AC-2 was intended for professional use, including real-time data transfer between recording studios. Dolby AC-2 is also the heart of the Dolby DSTL system, which is used for communication links in radio broadcasting. The data rate is 128-192 kbit/s per audio channel.

AUDIO CODEC AC3 (5.1)

Then came the third system, Dolby AC-3, a perceptual digital coding system created by Dolby Labs. AC-3 combines psychoacoustic methods with the latest advances in digital signal processing. It also builds on Dolby's experience with analog perceptual sound processing in its professional and consumer noise reduction systems. The results of Dolby Labs' noise reduction experiments became the basis for an efficient data compression algorithm: the fewer bits used to describe the audio signal, the greater the quantization noise, and vice versa.

The third Dolby AC-3 system.

“Quantization noise” arises in digital encoding because each sample of the analog signal must be rounded to one of a finite set of levels, so the stored value differs slightly from the real one. A greater bit depth gives finer steps and therefore smaller errors and less noise: the higher the bit depth, the better.
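The link between bit depth and quantization noise can be measured directly. This sketch rounds a full-scale sine to the step size of a given bit depth (no clipping is modeled) and compares the measured signal-to-noise ratio with the standard rule of thumb of about 6.02 dB per bit plus 1.76 dB for a sine wave. The 96 dB figure quoted earlier is the simpler ratio 20·log10(2^16); the sine-wave SNR adds the extra 1.76 dB.

```python
import math

def quantize(x: float, bits: int) -> float:
    """Round x (in [-1, 1]) to the nearest multiple of the step for this bit depth."""
    step = 2.0 / (2 ** bits)
    return round(x / step) * step

def quantization_snr_db(bits: int, n: int = 48_000) -> float:
    """Measure the SNR of a full-scale 997 Hz sine (48 kHz) after quantization."""
    signal = [math.sin(2 * math.pi * 997 * i / 48_000) for i in range(n)]
    noise = [quantize(s, bits) - s for s in signal]
    p_signal = sum(s * s for s in signal) / n
    p_noise = sum(e * e for e in noise) / n
    return 10 * math.log10(p_signal / p_noise)

for bits in (8, 12, 16):
    print(f"{bits:2d} bits: measured {quantization_snr_db(bits):5.1f} dB, "
          f"rule of thumb {6.02 * bits + 1.76:5.1f} dB")
```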

The algorithm is built around the psychoacoustics of human hearing. The audio spectrum of each channel is divided into several narrower frequency bands of different widths, optimized for the ear's different sensitivity at different frequencies. Taking this into account, along with the spectral content and dynamic range of the soundtrack, the data is distributed among the bands.

In effect, the AC-3 processor has a built-in model of human hearing. It varies its frequency selectivity (as far as time resolution allows) and the amount of data used to describe the signal in each band so that, subjectively, the quantization noise is completely masked by the audio itself. Where the signal in a band cannot mask its quantization noise, that band receives more bits, which reduces or completely eliminates the audible noise and preserves the original sound quality. In this sense, Dolby AC-3 is essentially a variety of “selective noise reduction”, in which different frequency bands are processed differently; the better-known selective noise reduction systems are Dolby C and Dolby S.

Data is distributed between channels in exactly the same way: each channel receives a different share. The algorithm allocates more data to the channels where the sound is actually richer, relying on a strong sound in one channel to mask the noise in others. Put simply, to reduce the amount of data, AC-3 discards information, or elements of the sound, that the human ear does not perceive. Making the amount of transmitted data for each channel depend on the characteristics of that channel's signal is the most important feature distinguishing the algorithm from competitors such as ATRAC.
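Allocating bits across bands and channels from a shared pool can be illustrated with a simple greedy loop: keep giving the next bit to whichever (channel, band) pair has the most audible noise, and stop once everything left over is masked. The sketch assumes that a psychoacoustic model has already produced a signal-to-mask ratio for each pair (the numbers below are made up) and that each bit lowers quantization noise by about 6 dB. Real AC-3 bit allocation is parametric and considerably more involved.

```python
from typing import Dict, Tuple

Key = Tuple[str, str]  # (channel, band)
DB_PER_BIT = 6.02      # each extra bit lowers quantization noise by about 6 dB

def allocate_bits(smr_db: Dict[Key, float], bit_pool: int) -> Dict[Key, int]:
    """Greedy allocation: give each bit to the band whose noise is most audible."""
    bits = {key: 0 for key in smr_db}
    for _ in range(bit_pool):
        # Noise-to-mask ratio: how far the quantization noise still exceeds the masking threshold.
        nmr = {key: smr_db[key] - DB_PER_BIT * bits[key] for key in smr_db}
        worst = max(nmr, key=nmr.get)
        if nmr[worst] <= 0:
            break  # all remaining noise is masked; extra bits would be wasted
        bits[worst] += 1
    return bits

# Hypothetical signal-to-mask ratios in dB.
demand = {
    ("front-left", "low"): 30.0,
    ("front-left", "high"): 12.0,
    ("surround", "low"): 5.0,
    ("surround", "high"): -4.0,  # already below the masking threshold
}
allocation = allocate_bits(demand, bit_pool=12)
for key, n in allocation.items():
    print(key, n)
```

With these numbers the busy front-left low band gets most of the bits, the fully masked surround high band gets none, and part of the pool is left unused because no audible noise remains.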

Dolby Stereo and Dolby Surround

By the mid-70s, Dolby's noise reduction and equalization technologies were already being used not only in music, but also in cinema. 1975 and 1976 were key years for the company - at this time Dolby Labs moved to San Francisco, and the Dolby Stereo theatrical format was born at the same time.

A Dolby Stereo soundtrack was first used in the 1976 film “A Star Is Born,” but the real sensation came with the 1977 film “Star Wars. Episode IV: A New Hope.”

The Dolby Stereo system in its original form was a four-channel configuration: front left, center and right channels, plus a surround channel.

There were two main types of film with Dolby Stereo sound. The first was 70 mm film, which could carry up to six magnetic soundtracks; because of its high cost, it was not used often. The 35 mm film with an optical soundtrack became the widespread option.

On 35 mm film, the four channels were matrix-encoded into two optical tracks, and in cinemas Dolby processors acted as decoders. The result was full four-channel sound.

The Dolby Surround format became the progenitor of all Dolby home systems. This early, simplified version of Dolby surround sound for home audio systems began to be used in 1982, when the first VCRs of the required level appeared.

As with Dolby Stereo, decoding worked with a matrix-encoded 4-channel soundtrack, but there were only three output channels: left, right and surround (the surround signal was derived from the difference between the L and R signals).

In 1987, home surround sound was upgraded to Dolby Surround Pro Logic (or simply “Dolby Pro Logic”). The updated format likewise relied on encoding in the studio and a processor for subsequent decoding, but Pro Logic produced four output channels instead of three: left, right, center and surround.
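The matrix idea behind Dolby Stereo, Dolby Surround and Pro Logic can be sketched as four channels folded into two (Lt and Rt) and separated again by sums and differences. This is a simplified illustration: it omits the ±90° phase shift, band-limiting and delay applied to the surround channel in real systems, and the function names are hypothetical.

```python
import math

K = 1 / math.sqrt(2)  # -3 dB mixing coefficient

def matrix_encode(left, right, center, surround):
    """Fold four channels into two: center in phase, surround out of phase."""
    lt = left + K * center + K * surround
    rt = right + K * center - K * surround
    return lt, rt

def passive_decode(lt, rt):
    """Recover four outputs: center from the sum, surround from the difference."""
    return {
        "left": lt,
        "right": rt,
        "center": K * (lt + rt),
        "surround": K * (lt - rt),
    }

print(passive_decode(*matrix_encode(0.0, 0.0, 1.0, 0.0)))  # center-only input
print(passive_decode(*matrix_encode(0.0, 0.0, 0.0, 1.0)))  # surround-only input
print(passive_decode(*matrix_encode(1.0, 0.0, 0.0, 0.0)))  # left-only input
```

A passive decoder like this leaks: a sound in the left channel alone also appears, 3 dB down, in the center and surround outputs. Pro Logic's contribution was active steering logic that detects the dominant direction and suppresses such leakage; that logic is not modeled here.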

Pro Logic processors, in addition to upmixing the signal, were able to process it for a more naturalistic, accurate sound comparable to a commercial cinema.

In 1989, Ray Dolby and Ioan Allen, vice president and senior engineer at Dolby Labs, received Oscars for their contributions to the development of theatrical sound.

What is Dolby Surround Sound System?

Surround sound is a technique for improving the reproduction of a sound source by adding extra channels and speakers.

Overall, such a system projects sound across 360 degrees in the horizontal plane, whereas sound delivered through the on-screen channels comes only from in front of the listener.

Each sound is presented at a specific position relative to the listener, which lets sound effects work correctly. The system also offers a fixed perspective of the sound field to listeners seated at the position it was designed for. Together, this strengthens the sense of space through sound localization.

The approach applies to all kinds of content, whether games, smartphones, portable devices or, above all, cinema, giving listeners better sound quality and a better sense of perspective.

Digital Age: Dolby Digital

In 1991, the fully discrete Dolby Digital format (AC-3 codec) appeared. Until the mid-1990s, the standard was called “Dolby Stereo Digital”. The technology was based on the MDCT (modified discrete cosine transform) and data compression algorithms.

The Dolby Digital (DD) optical soundtrack carried several independent channels (up to six, in a 5.1 configuration). Audiences first heard DD sound in 1992, at the premiere of Batman Returns. The medium was still 35 mm film, but the AC-3 data was printed on it in a new way, as blocks of data between the sprocket holes.

Dolby Digital

To capture optical information, a special scanner and processor were created, which transformed the optical soundtrack into a bit stream for subsequent decoding and multi-channel playback.

In 1995, Dolby Digital sound came to home theaters. The first release with a Dolby Digital surround soundtrack was the LaserDisc of Clear and Present Danger.

Dolby Digital Plus

The 1991 format grew into a whole family of DD standards: Dolby Digital EX, Dolby Digital Live, Dolby Digital Plus, Dolby Digital Surround EX, Dolby Digital Recording, Dolby Digital Cinema, Dolby Digital Stereo Creator.

Dolby Labs considers the most significant cinematic achievement of those years to be the Dolby Digital Surround EX format (1999), which became an expanded 6.1-channel version of the usual Dolby Digital. Dolby engineers were prompted to create this standard by the film industry, which needed systems capable of creating even more “three-dimensional” sound effects.

Dolby Digital Surround EX

Director George Lucas took part in the development of Dolby Digital Surround EX. The standard was first used in “Star Wars. Episode I: The Phantom Menace.”

This format did not become widespread in the home (home AV systems were in most cases equipped with Dolby Digital and, later, Dolby Digital Plus decoders), but a number of Star Wars DVDs were released with Surround EX soundtracks.

Among the important events of the 90s that went down in the history of Dolby Laboratories was an award from the Academy of Motion Picture Arts and Sciences (AMPAS) for the creation of the Dolby Digital format. Also for Dolby Labs, the first live HDTV broadcast (1998) with Dolby Digital 5.1 sound is considered a historical event.

2000s: development of Pro Logic and emergence of Dolby True HD

In the first half of the new decade, Dolby Labs focused on improving channel upmixing algorithms. This is how Pro Logic II (DPL II) and Pro Logic IIx (DPL IIx) were born. Both systems converted a two-channel signal into a multichannel one.

Pro Logic IIz

DPL II technology (2000) made it possible to obtain 5.1 sound from a stereo signal. The more advanced DPL IIx (2002) converted both stereo and 5.1 surround into 6.1 or 7.1 sound. Both algorithms offered special modes: “cinema”, “music”, “games”, etc.

In 2009, another version of Pro Logic appeared - IIz. This format had the ability to upmix stereo and 5.1/7.1 surround sound up to a 9.1-channel system.

In February 2005, Dolby Laboratories became a publicly traded company. The company's shares became available on the New York Stock Exchange under the trading symbol DLB. Also in 2005, the company's engineers presented the final versions of the Dolby Digital Plus and Dolby TrueHD surround formats.

DD Plus used E-AC-3 (Enhanced AC-3) audio tracks with higher bitrates and, correspondingly, better sound quality. The format was made backward compatible with regular Dolby Digital, so previous generations of home processors could handle the DD Plus signal. Soundtracks in this 7.1-channel format have been used on Blu-ray discs since 2006.

Dolby TrueHD

Dolby TrueHD was Dolby Labs' first lossless audio format (using Meridian's MLP algorithm). This standard received support for quality up to 24 bit/192 kHz with a bitrate of up to 18 Mbit/s.

Most Dolby TrueHD content is still created with six or eight channel soundtracks, but the format supports up to 16 channels. Blu-ray movies use 5.1- or 7.1-channel Dolby TrueHD soundtracks.

The second half of the 2000s became a period of active development for Dolby Labs from a commercial point of view. The company participated in international exhibitions, introduced new technologies into partner products and opened new offices in Asia.

Dolby Surround 7.1

In 2010, Dolby Surround 7.1 sound was introduced to commercial cinemas. This 8-channel format was based on Dolby Digital 5.1. The first film in the new format was Disney and Pixar's Toy Story 3.

These days, almost all movies in theaters have Dolby Surround 7.1, but most theaters play standard Dolby Digital 5.1 audio due to the limitations of the equipment.

Dolby Atmos

In 2012, a new generation cinema format was introduced - Dolby Atmos. This new technology turned out to be a breakthrough both for Dolby Labs itself and for the entire industry. Most AV equipment and speaker manufacturers have launched special lines of Atmos products designed to work with this format.

Dolby Atmos

Dolby Atmos is a surround sound technology that is based not on channels, but on audio objects. A cinema (or home) Dolby Atmos system distributes all sound sources according to their position specified by the sound engineer. Dolby Atmos can process up to 128 track objects simultaneously and distribute them across 64 output channels. The home version of Dolby Atmos can use up to 30 discrete channels, including ceiling channels.

The base layer of the phonogram (Bed Layer) is recorded in Dolby TrueHD. Sound objects are WAV files with metadata. The Atmos processor not only works with 7.1 audio decoding, but also simultaneously calculates objects, adding them to the base layer.

Dolby Atmos

The minimum configuration required to receive Atmos audio is a 5.1.2-channel system with two height channels. At the initial stages, Dolby Labs itself recommended setups from 7.1.2 and 7.1.4, but other more complex configurations with 32 or 34 channels are also possible.
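Layout names such as 5.1.2 follow a simple convention: the first number counts the main speakers around the listener at ear level, the second counts the low-frequency effects (subwoofer) channels, and the third, when present, counts the height or ceiling speakers. A small illustrative parser (the function name is hypothetical):

```python
def speaker_counts(layout: str) -> dict:
    """Split 'X.Y' or 'X.Y.Z' notation into main, LFE and height speaker counts."""
    parts = [int(p) for p in layout.split(".")]
    if not 2 <= len(parts) <= 3:
        raise ValueError(f"unexpected layout: {layout!r}")
    main, lfe = parts[0], parts[1]
    height = parts[2] if len(parts) == 3 else 0
    return {"main": main, "lfe": lfe, "height": height, "total": main + lfe + height}

for layout in ("5.1", "5.1.2", "7.1.2", "7.1.4"):
    print(layout, speaker_counts(layout))
```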

Also read our materials about Dolby Atmos technology:

https://stereo.ru/to/k77s5-dekodiruem-dolby-atmos-doma-resheniya-ot-cirrus-logic-analog-devices-i

https://stereo.ru/to/itbpa-chto-takoe-dolby-atmos-ili-mnogokanalnyy-zvuk-bez-kanalov

What is Dolby Atmos on smartphones? Or: a talk about the wide soundstage in headphones


People are divided into two types. The first, having bought a more expensive, higher-quality item, begin to enjoy it. The second begin to suffer.

If you belong to the first type, you're probably in luck. Unfortunately, I can only speak for the second. For such people, the joy of buying a good thing is quickly replaced by the unbearable thought that an even better thing exists somewhere.

After all, if the difference between $20 and $100 headphones was so impressive, then what will the sound be like in a $600 model?

However, the world is not so straightforward. Sometimes, in order to enjoy better things, you need to change yourself, to learn to pay attention to what you have never noticed before.

If you like your coffee with sugar, then by adding more and more sugar, at some point you will ruin the drink completely. And to develop your taste further, you need to switch from sweetness to something else - aroma, texture, aftertaste, etc.

The same goes for sound. If your $20 headphones had no bass at all, and with $100 headphones you feel the low frequencies with your whole body, then raising the budget another 10 times won't give you 10 times more bass. This is simply physically impossible: you are limited by the length of the basilar membrane of your own inner ear.

So you have to pay attention to other things and then you begin to understand that good sound is not only about bass, but also about detail, tonal balance and, of course, stage!

The soundstage is exactly what everyone passionate about high-quality sound wants to hear in their headphones: the music is miraculously moved out of your head into the surrounding space, giving the impression of a live performance on stage rather than of ear pads clamped over your ears with all the music rumbling inside the ear canal.

How to achieve this? Buy something like a $1000 Sennheiser HD800 and an expensive amplifier, and then try to hear the soundstage on the highest-quality recordings?

I propose another option, more technologically advanced. And today we will talk about the stage and Dolby Atmos technology, available on many modern smartphones, which works with any headphones.

Believe me, the vast majority of those who have heard of this technology, and even supposedly used it, have never actually experienced it.

What is Dolby Atmos? Or why does an esthete need another sound “improver”?

If you read messages on forums or comments on popular resources, you will quickly find the answer to your question. It will sound something like this:

- Dolby Atmos is an equalizer program that boosts bass and treble to make cheap Chinese headphones sound more interesting.

- Dolby Atmos is a technology for cinemas that has nothing to do with music, especially high quality music!

- Dolby Atmos is a good technology that requires dozens of speakers to work. What smartphones and headphones are we talking about?

- Dolby Atmos is another sound “improvement” for those who do not understand the topic. It changes the original beyond recognition, turning it into some kind of mess of sounds and cheap 3D effects.

In a word, such a revolution in the field of sound... But, fortunately, all these answers have nothing to do with the correct one.

Yes, Dolby Atmos is all about surround sound. That is, a sound that you can feel in front or behind you, or you can even hear something right above your head. But is the whole point of Dolby Atmos technology (and its difference from 5.1 surround sound) precisely in the additional speakers placed on the ceiling?

Of course not. The main and fundamental difference between Dolby Atmos and everything that came before is object-oriented sound. When recording sound in Dolby Atmos, we have the same audio tracks (guitar, drums, vocals, etc.) as when recording regular stereo or 5.1 surround sound. But Dolby Atmos additionally introduces the concept of Atmos objects.

You can not only record a guitar, but also create an object in space associated with this sound, which has its own specific coordinates and a number of other properties. That is, in addition to audio tracks, any recording in the Dolby Atmos format contains so-called metadata - a set of objects and their description.

For example, if a vocalist walks across the stage, the audio file will contain not only a track with his voice, but also an associated object, the metadata of which will record the entire “route of movement” along the virtual stage.
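To make the idea of an object with a “route of movement” concrete, here is a hypothetical data structure: a named track plus timed position keyframes, with linear interpolation between them. This is only an illustration; the actual Dolby Atmos metadata format is not described in this article, and the class name, coordinate convention and interpolation rule are assumptions.

```python
from bisect import bisect_right
from dataclasses import dataclass, field
from typing import List, Tuple

# Hypothetical convention: x = left/right, y = front/back, z = height.
Position = Tuple[float, ...]

@dataclass
class AudioObject:
    """A track plus metadata describing where it is over time (hypothetical structure)."""
    name: str
    # (time in seconds, position), sorted by time; at least one keyframe is assumed.
    keyframes: List[Tuple[float, Position]] = field(default_factory=list)

    def position_at(self, t: float) -> Position:
        """Linearly interpolate the object's position between keyframes."""
        times = [k[0] for k in self.keyframes]
        i = bisect_right(times, t)
        if i == 0:
            return self.keyframes[0][1]   # before the first keyframe
        if i == len(self.keyframes):
            return self.keyframes[-1][1]  # after the last keyframe
        (t0, p0), (t1, p1) = self.keyframes[i - 1], self.keyframes[i]
        a = (t - t0) / (t1 - t0)
        return tuple(c0 + a * (c1 - c0) for c0, c1 in zip(p0, p1))

# A vocalist walking from stage left to stage right over four seconds.
vocalist = AudioObject("vocals", [(0.0, (-1.0, 1.0, 0.0)), (4.0, (1.0, 1.0, 0.0))])
print(vocalist.position_at(2.0))  # (0.0, 1.0, 0.0)
```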

Dolby Atmos is not 5.1 surround sound

This is the technology's main distinguishing feature. In 5.1 surround sound, all audio tracks are rigidly assigned to fixed channels (for example, one sound must play from the front left speaker and another from the rear right), whereas in Dolby Atmos audio objects are not tied to channels or speakers at all.

When playing music, a Dolby Atmos system builds a virtual stage based on how many speakers it has at its disposal. Since Dolby Atmos knows the real location of each sound object without reference to a channel or speaker (this is written in the music file), the system can try to recreate a virtual scene with any number of speakers.

If you connect 30 speakers to a Dolby Atmos device, then the system will use each of them to recreate the scene as realistically as possible. If you only have 5 speakers, Dolby Atmos will try to “visualize” all sound objects using this amount of acoustics, because the tracks are not tied to specific speakers and the system has the “freedom” to choose the optimal “location” of the sound.
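The principle of rendering the same object to whatever speakers are available can be shown with the simplest possible renderer: each object is placed by constant-power panning between the two neighboring speakers around the listener, in the horizontal plane only. This is not Dolby's renderer, whose algorithm the article does not describe; the function name, azimuth convention and speaker angles are assumptions for illustration.

```python
import math
from typing import List

def render_object(azimuth_deg: float, speaker_azimuths_deg: List[float]) -> List[float]:
    """Constant-power pairwise panning: one gain per speaker for one object.

    Azimuths are in degrees: 0 = straight ahead, positive = to the listener's
    left (a convention chosen for this sketch). Speaker azimuths must be distinct.
    """
    n = len(speaker_azimuths_deg)
    gains = [0.0] * n
    if n == 1:
        gains[0] = 1.0
        return gains
    order = sorted(range(n), key=lambda i: speaker_azimuths_deg[i] % 360)
    source = azimuth_deg % 360
    for pos, i in enumerate(order):
        j = order[(pos + 1) % n]  # next speaker going counter-clockwise
        start = speaker_azimuths_deg[i] % 360
        span = (speaker_azimuths_deg[j] - speaker_azimuths_deg[i]) % 360
        offset = (source - start) % 360
        if offset <= span:
            t = offset / span  # 0 at speaker i, 1 at speaker j
            gains[i] = math.cos(t * math.pi / 2)
            gains[j] = math.sin(t * math.pi / 2)
            return gains
    raise ValueError("speaker azimuths must be distinct")

stereo = [30.0, -30.0]                    # L, R
five = [0.0, 30.0, -30.0, 110.0, -110.0]  # C, L, R, Ls, Rs
for layout in (stereo, five):
    gains = render_object(70.0, layout)
    print([round(g, 3) for g in gains])
```

The object's position never changes; only the speaker gains do. With five speakers, an object at 70° lands between the left and left-surround speakers; with only two, the same metadata is squeezed onto the stereo pair as well as it can be.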

This is the revolution in surround sound - a transition from the classical idea of channels (speakers) to the concept of objects that you can try to “visualize” on any available number of speakers.

Dolby Atmos on smartphones. Or what to do when there are not thirty speakers, but only two

So, Dolby Atmos is high quality audio (for those who understand - up to a sampling rate of 192 kHz and 24-bit audio depth) with Atmos objects, the metadata of which clearly states the real location of each sound source in space.

The only problem is that we are talking about smartphones and headphones connected to them, often wireless. Why do we need all these Atmos objects if the sound will still be in stereo, like any other regular recording?

Before answering this question, let me say right away that Dolby Atmos works excellently not only with wired but also with wireless Bluetooth headphones. I will return to this point a little later, as it raises other questions.

Now think carefully, is it purely theoretically possible to make a person wearing headphones clearly hear a sound, say, a meter to his left or two meters behind him? And above your head? That is, the sound comes only from the left and right headphones, but the listener is sure that this is not so - he clearly hears it above his head.

If you are sure that this is impossible, then ask yourself one last question - how then do you hear all the sounds in the real world and feel them from all sides? After all, you don’t have thirty ears, but only two ears - a regular “stereo system”. No matter where the sound comes from, it will only end up in the left and right ears. And yet, with your eyes closed, you determine whether the sound is coming from above or from the right.

How do you manage to do this!?

Head casting shadow on ear

There is no spatial sound. No sound from above or below, no sound from right or left. There are only two ears that hear slightly different sounds depending on where it comes from.

Let's say someone clapped their hands to our right. Any person will determine that the sound is clearly heard on the right. Why? Because the brain made certain calculations and then played for our imagination a beautiful three-dimensional sound, heard as if from the right side.

For these calculations, our brain used mainly 3 variables:

Difference in time

When the clap came from the right, the sound reached the right ear first and the left ear a fraction of a millisecond later. This difference is very important for accurate localization of sound in space.
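How small is this delay? A common textbook estimate is Woodworth's spherical-head formula, ITD = (a/c)(θ + sin θ), where a is the head radius, c the speed of sound and θ the source azimuth. The sketch assumes an average head radius of 8.75 cm and a speed of sound of 343 m/s, and is meant for azimuths between 0 and 90 degrees.

```python
import math

HEAD_RADIUS_M = 0.0875   # assumed average head radius
SPEED_OF_SOUND = 343.0   # m/s in air at about 20 degrees C

def woodworth_itd(azimuth_deg: float) -> float:
    """Interaural time difference in seconds for a distant source (spherical head)."""
    theta = math.radians(azimuth_deg)  # 0 = straight ahead, 90 = directly to one side
    return HEAD_RADIUS_M / SPEED_OF_SOUND * (theta + math.sin(theta))

for az in (0, 30, 60, 90):
    print(f"{az:2d} deg: {woodworth_itd(az) * 1000:.2f} ms")
```

For a source directly to one side this gives roughly 0.66 ms, so the brain is working with differences well under a millisecond.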

Volume difference

The intensity (volume) of sound in the right ear will be much higher than in the left if the sound source is on the right. Thus, the brain compares not only the time delay, but also the difference in loudness.

Timbre distortion

Let's return to the clap. Despite its apparent simplicity, it is a very complex sound, consisting of a wide variety of frequencies. Any sound, even a single note, contains many frequencies. If the note A is a frequency of 440 Hz, then depending on who or what is playing it, the sound will contain many additional frequencies (harmonics).

It is by these additional frequencies (harmonics) that we identify a specific instrument or the voice of a specific person.

If the sound comes from the right, all frequencies enter the right ear, and the right ear hears the most natural timbre. But on the way to the left ear there is an obstacle: our head, which acts as a sound barrier or filter. Some waves, mostly at higher frequencies, are simply blocked by the head and no longer reach the left ear. We say that the head casts an acoustic shadow.

The brain takes into account all these and many other nuances, including data received from the eyes, and only then builds the correct sound stage.

But if it's so simple, why can't we trick the brain with headphones? Introduce the same natural sound distortion into one ear, make the sound louder or softer in different ears, and send the same signal to both headphones with a slight delay? In general, do everything to make the brain “draw” a three-dimensional scene?
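The two simplest tricks from that list, a small delay and a level difference between the ears, fit in a few lines. This is a crude, hypothetical illustration: the function name and the values of 0.6 ms and 6 dB are arbitrary choices, and it leaves out the frequency-dependent timbre changes that a real head adds.

```python
from typing import List, Tuple

def crude_binaural(mono: List[float], sample_rate: int,
                   itd_s: float, ild_db: float) -> Tuple[List[float], List[float]]:
    """Place a mono sound to the listener's right using only a delay and a level difference.

    The far (left) ear gets the sound itd_s seconds later and ild_db quieter.
    Frequency-dependent timbre changes are not modeled.
    """
    delay = round(itd_s * sample_rate)      # ITD as a whole number of samples
    far_gain = 10 ** (-ild_db / 20)         # ILD as a linear gain
    right = list(mono) + [0.0] * delay      # near ear: unchanged, padded to equal length
    left = [0.0] * delay + [far_gain * s for s in mono]
    return left, right

left, right = crude_binaural([1.0, 0.5, 0.25], sample_rate=48_000, itd_s=0.0006, ild_db=6.0)
print(len(left), len(right))
```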

In fact, to achieve this we don’t even need to process the sound; we just need to record it with an unusual microphone: an artificial head.

High quality studio microphones are hidden in each ear of this artificial head. All you have to do is install the head in a real studio. Sound will naturally hit one microphone before another, and the head will cast an acoustic shadow.

All the details are important here - even the shape of the auricle plays a very important role (it slightly changes the sounds coming from behind). Sound reflected from its curves and curls will reach each ear differently depending on the location of the source.

So we now have a left and right ear audio signal that we would hear if we were actually in a recording studio or on stage. All that remains is to send this signal to our real ears. To do this, it is enough to use ordinary headphones. They will play back the sound that has already been recorded, taking into account all the delays, timbre distortions and volume differences caused by the anatomical features of the head. And our brain will build a three-dimensional picture.

Such recordings exist, and they are called binaural. Put on any headphones, close your eyes and play the classic binaural audio demo known as the “Virtual Barber Shop”.

Of course, the 3D effect will depend on the quality of the headphones and in more expensive models the scene will be more realistic. But you can notice the difference with stereo sound even in the cheapest headphones.

And now we come to the core of Dolby Atmos for smartphones!

Dolby Atmos, smartphone, headphones and the head-related transfer function

So, here is what Dolby Atmos on a smartphone does. The system receives a file that, in addition to audio tracks, stores metadata describing Atmos objects in space. From this, the system can recreate the scene on any number of speakers.

But if we listen to Dolby Atmos on headphones (wired or Bluetooth), the system creates binaural sound.

For example, there is a guitar object in the file, which is located in front a little to your right, the location of the object is recorded in the Dolby Atmos format metadata. The same file also contains a guitar audio track.

If this guitar were actually located a meter away from you, a little to the right, how would the sound change as it entered your right and left ear? How would the shape of the head, torso, and posture of a person influence it? Which ear would this sound reach first and by how much?

All these questions can be answered with a certain accuracy using only mathematical calculations. That is, it is possible to calculate the movement of a sound wave taking into account distance, head shape, ear location and shape.

This is exactly what the Dolby Atmos algorithms do. They apply a head-related transfer function (HRTF) to the sound of the guitar. It is this complex mathematical function that describes how sound changes as it travels from the source to each ear.
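In signal-processing terms, applying an HRTF means filtering the source with a pair of head-related impulse responses (HRIRs), one per ear. A minimal sketch, assuming made-up toy HRIRs rather than measured or computed ones; the function names are illustrative.

```python
from typing import List, Tuple

def convolve(signal: List[float], impulse_response: List[float]) -> List[float]:
    """Direct-form linear convolution (fine for short illustrative filters)."""
    out = [0.0] * (len(signal) + len(impulse_response) - 1)
    for i, s in enumerate(signal):
        for j, h in enumerate(impulse_response):
            out[i + j] += s * h
    return out

def binauralize(mono: List[float], hrir_left: List[float],
                hrir_right: List[float]) -> Tuple[List[float], List[float]]:
    """Render a mono source at the direction for which the HRIR pair was made."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)

# Toy impulse responses for illustration only (a real HRIR has hundreds of taps).
toy_left = [0.0, 0.0, 0.0, 0.3, 0.1]  # later and quieter: the ear in the head's shadow
toy_right = [0.9, 0.2]                # earlier and louder: the ear facing the source
guitar = [1.0, -0.5, 0.25]
left, right = binauralize(guitar, toy_left, toy_right)
print(left)
print(right)
```

For several objects, each would be filtered with the HRIR pair for its own position and the results summed per ear; as objects move, a renderer has to switch or interpolate between HRIRs. How Dolby's renderer handles this is not described in the article.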

If we took a regular mp3 file or even an uncompressed studio-quality file, Dolby Atmos technology would not be able to do anything with it, since the file does not describe the location of all the instruments and the vocalist in space. We need a special format with metadata.

And when that data is available, Dolby Atmos can create a true 3D scene for headphone listening using HRTF and binaural audio. This is exactly what happens when you activate this technology on a compatible smartphone.

In other words, Dolby Atmos, using mathematics and the pre-known position of all instruments, adjusts each sound as it would change in reality if it were delivered to our ears, taking into account distance, shape of the head and ears, body, etc.

There are still plenty of questions left... but plenty of answers too!

It all sounds very convincing, doesn't it? But I'm sure some readers have questions, especially those who have "tried" Dolby Atmos and were completely unimpressed with this technology.

Let's look at the most common ones, starting with the least obvious:

Where did Dolby Atmos get the transfer function of my head?

Indeed, even the binaural recording example above will sound different to each person, since the artificial head and ears used during recording may be shaped very differently from your own.

I'll go further. If the shape of your ears were to change, you would have serious trouble localizing sounds in space for a while. It would take several weeks for the brain to readjust its calculations to the new shape of the ears.

Exactly the same thing happens with Dolby Atmos. The technology applies an HRTF based on an average head and ears to the audio signal, without taking into account the physiology of a particular listener.

Therefore, the sense of spatial sound may differ slightly from person to person. This is especially true for children, whose smaller heads and ears differ noticeably from the average.
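A rough way to see why a one-size-fits-all HRTF matters is to look at the timing cue alone. The toy model below assumes the renderer encodes a source at 30° using Woodworth's spherical-head formula with an average head radius, and that a listener interprets the resulting interaural time difference using their own, smaller head radius (7 cm here, a hypothetical value). Real localization also relies on level and spectral cues from the outer ear, so this only illustrates the direction of the error.

```python
import math

C = 343.0  # speed of sound, m/s


def itd(azimuth_rad: float, head_radius: float) -> float:
    """Woodworth spherical-head ITD in seconds (azimuth from 0 to pi/2)."""
    return head_radius / C * (azimuth_rad + math.sin(azimuth_rad))


def perceived_azimuth_deg(itd_s: float, listener_radius: float) -> float:
    """Azimuth a listener with the given head radius would associate with itd_s.

    Inverts the Woodworth formula by bisection; clamps to 90 degrees if the
    ITD is larger than this head can produce.
    """
    lo, hi = 0.0, math.pi / 2
    if itd_s >= itd(hi, listener_radius):
        return 90.0
    for _ in range(60):
        mid = (lo + hi) / 2
        if itd(mid, listener_radius) < itd_s:
            lo = mid
        else:
            hi = mid
    return math.degrees((lo + hi) / 2)


GENERIC_RADIUS = 0.0875  # assumed "average" head radius used by the renderer, m
CHILD_RADIUS = 0.07      # hypothetical smaller head, m

rendered = itd(math.radians(30), GENERIC_RADIUS)  # cue rendered for a source at 30 degrees
print(f"average-sized head: ~{perceived_azimuth_deg(rendered, GENERIC_RADIUS):.1f} deg")
print(f"smaller head:       ~{perceived_azimuth_deg(rendered, CHILD_RADIUS):.1f} deg")
```

Under these assumptions the smaller head places the source noticeably farther to the side than intended.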

Why does Dolby Atmos feel like a cheap sound enhancer?

You have most likely tried pulling down the notification shade on your smartphone and turning on Dolby Atmos:

But you didn’t get much of a wow effect. On the contrary, the sound may have seemed unnaturally processed, as if you were just playing with the equalizer.

That is to be expected if you were listening to music in regular formats (MP3, AAC, etc.) or in lossless formats. None of them carry dynamic audio-object metadata; the concept simply does not exist in the world of classic stereo sound.

To feel the effect, you need a file that contains this metadata, delivered with the Dolby Digital Plus or Dolby TrueHD codecs. In all other cases, there can be no talk of full-fledged Dolby Atmos. Dolby Atmos, let me remind you, is object-based sound, and it requires sound objects.

That's why you may have a false impression of this technology: you simply haven't actually heard it.

Unfortunately, it's not enough just to have a file, or an app that can deliver a Dolby Atmos file to you. Your smartphone also needs a Dolby Digital Plus or Dolby TrueHD software decoder. Therefore, if your smartphone does not support Dolby Atmos, no app will help.

Netflix has had Dolby Atmos audio available for a long time, but there's not much difference!

If you watch Netflix on your smartphone with a Premium (Ultra HD) subscription, Dolby Atmos audio should, in theory, be available. It should be, but it isn't.

As of this writing, Netflix does not support Dolby Atmos audio on any smartphone, only HDR video (HDR10 or Dolby Vision).

Therefore, it is not yet possible to try Dolby Atmos on a smartphone via Netflix or other video streaming services.

Where can I try Dolby Atmos audio?

Currently, you can enjoy true Dolby Atmos in only three apps (streaming services): Amazon Music HD, TIDAL, and Apple Music. The first two are not officially available in the CIS countries:

But the issue is easily resolved. You can legally use these services in any country; all you need to do is go to the service's website and register an account using a VPN. All of these services accept cards from any bank.

Apple Music is the easiest way to try Dolby Atmos, and it even has an interesting audio guide on the technology.

You can test Dolby Atmos for free, since all services provide a trial period, after which you can unsubscribe.

I'll even venture to recommend an album to start with: MicroSinfonias by Sergio Vallin (for example, the track Donde Esta Mi Primavera):

Other streaming services will no doubt support Dolby Atmos in the future, but for now these are the only options.

Cheap headphones + Dolby Atmos = expensive audiophile headphones?

Unfortunately, that's not how it works. Cheap headphones with Dolby Atmos will sound much worse than high-quality headphones with Dolby Atmos, just as they do without it.

As we have already established, the “scene” is built from slight differences between the sound in the right and left earpieces. But these differences must come solely from the sound source itself; that is, the headphones must not introduce any distortion of their own into the signal.

In other words, if we feed the same signal to the right and left speakers, they should produce identical sound without the slightest difference. In reality, things are far less rosy: the lower the quality of the headphones, the greater this difference.

The two speakers in cheap headphones may have different frequency responses. For example, the right speaker may play a 100 Hz tone slightly louder than the left one. And that is just one frequency: the audible range spans roughly 20 Hz to 20 kHz, so there are thousands of chances for the headphones to spoil the “scene” by distorting the signal because of their design and low build quality.
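The effect of mismatched speakers can be expressed as a left-minus-right level difference at each frequency. The sketch below uses made-up measurement points (not values read off any of the graphs in this article) to show how such a mismatch would be quantified; any non-zero result is a level difference the headphones add on their own, on top of the one the renderer encoded on purpose.

```python
from typing import Dict


def channel_mismatch_db(left_db: Dict[int, float], right_db: Dict[int, float]) -> Dict[int, float]:
    """Left-minus-right level difference in dB at every frequency measured on both sides.

    Ideal headphones give 0 dB everywhere. Any non-zero value behaves like an extra,
    unintended interaural level difference.
    """
    return {f: left_db[f] - right_db[f] for f in sorted(left_db) if f in right_db}


def db_to_amplitude_ratio(db: float) -> float:
    """Convert a level difference in dB to an amplitude ratio (20*log10 convention)."""
    return 10 ** (db / 20)


# Hypothetical measurements in dB relative to a reference, NOT data for any real model.
left_response = {100: 1.0, 1000: 0.0, 5000: -2.5, 10000: 3.0}
right_response = {100: 0.0, 1000: 0.0, 5000: 0.5, 10000: 1.0}

mismatch = channel_mismatch_db(left_response, right_response)
worst_freq = max(mismatch, key=lambda f: abs(mismatch[f]))
print(mismatch)
print(f"worst at {worst_freq} Hz: {mismatch[worst_freq]:+.1f} dB "
      f"(left/right amplitude ratio {db_to_amplitude_ratio(mismatch[worst_freq]):.2f})")
```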

Look at the frequency response of the right and left speakers of Apple AirPods Pro wireless headphones:

We see that the left speaker (blue line) reproduces some frequencies slightly quieter than the right one (gray line), and others noticeably louder. This alone is enough to affect the scene.

And here is a graph of the much more expensive Sennheiser Momentum True Wireless:

The two curves coincide almost completely, with only a slight discrepancy at the very end of the frequency range. All other things being equal, these headphones would “draw” the scene more accurately, especially with Dolby Atmos, where even the smallest differences in the signal must be conveyed.

There is another important parameter that affects the “scene”: the phase mismatch between the speakers. We won't go too deep into this topic now, but take a look at the graph for the typical inexpensive Sound Intone CX-05 headphones, which many bloggers have rated as “best for the money”:

This graph shows the phase response of the left (blue) and right (red) earpieces. Ideally, these lines should match perfectly; that is, the phase response of both speakers should be identical, with neither one lagging behind or running ahead of the other.
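To get a feel for why phase mismatch matters, a phase difference at a given frequency can be converted into an equivalent time offset between the channels. The 30° mismatch below is a hypothetical value, not read off the graphs in this article. The point is that such offsets are of the same order as the interaural time differences the brain uses to localize sound (under about 0.7 ms for an average head).

```python
def phase_to_delay_us(phase_deg: float, freq_hz: float) -> float:
    """Time offset in microseconds equivalent to a phase difference at one frequency.

    One full cycle (360 degrees) lasts 1 / freq_hz seconds, so
    delay = (phase_deg / 360) / freq_hz.
    """
    return phase_deg / 360.0 / freq_hz * 1e6


# Hypothetical 30-degree left/right phase mismatch evaluated at a few frequencies.
for freq in (100, 1000, 5000):
    print(f"{freq:>5} Hz: {phase_to_delay_us(30.0, freq):7.1f} us")
```

At 100 Hz, even this 30° error corresponds to more than 0.8 ms, longer than the largest natural interaural delay.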

For comparison, look at the same characteristics of the Samsung Galaxy Buds Pro, which I mainly use for listening to music in Dolby Atmos (always with the Scalable codec):

I think there is no need to explain which of these two models will “draw” the scene more realistically.

So, unfortunately, Dolby Atmos will not turn cheap headphones into audiophile ones. Yes, some sense of space will appear, but the gap compared with higher-quality headphones will be striking.

How will Dolby Atmos be transmitted over Bluetooth? Do I need a special high bitrate codec?

Support for a special codec is needed at the app and smartphone level to unpack the file and extract the information about each track and all the audio objects.

The Dolby Atmos system on the smartphone then applies the HRTF to the sound, that is, it slightly alters the signal for the left and right earpieces, and finally the smartphone uses whatever codec the headphones support (SBC, AAC, aptX, and so on).

In other words, all the “magic” happens to the sound before the two tracks (for the left and right speakers) are sent to the headphones. Therefore, Dolby Atmos needs a higher bitrate for the transfer from the streaming service to the smartphone.

After processing, however, a higher bitrate is not required, because we end up with exactly the same two audio tracks as when listening to an MP3. The tracks themselves are simply modified slightly (not distorted, as “enhancers” do, but changed to produce binaural sound).
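The arithmetic behind this is simple: the renderer collapses any number of objects into two channels, so what goes over Bluetooth is an ordinary stereo stream. The figures below are a back-of-the-envelope sketch using uncompressed PCM and an assumed count of 16 objects; real Dolby Atmos streams and Bluetooth codecs are heavily compressed, so only the ratio is meaningful here.

```python
def pcm_bitrate_kbps(sample_rate_hz: int, bit_depth: int, channels: int) -> float:
    """Uncompressed PCM bitrate in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000


SAMPLE_RATE = 48_000  # Hz (assumed)
BIT_DEPTH = 24        # bits per sample (assumed)
OBJECTS = 16          # hypothetical number of audio objects in the scene

before_render = pcm_bitrate_kbps(SAMPLE_RATE, BIT_DEPTH, OBJECTS)  # one track per object
after_render = pcm_bitrate_kbps(SAMPLE_RATE, BIT_DEPTH, 2)         # binaural: left + right only
print(f"before rendering: {before_render:.0f} kbps, after rendering: {after_render:.0f} kbps")
```

Note that `after_render` does not depend on `OBJECTS` at all, which is why the Bluetooth link needs no special codec.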

What if you listen to music without headphones?

If you place a smartphone with stereo speakers directly in front of you, about half a meter away, close your eyes, and play a track in Dolby Atmos, you will not be able to tell exactly where the smartphone is. Sounds will come from literally everywhere.

For a Dolby Atmos test without headphones, I recommend starting with the track Final View From The Rooftops by London Elektricity:

Of course, the sound quality will be incomparably better with headphones, but the sense of space and stage will be excellent even on smartphone speakers.

Here, HRTF processing and binaural sound alone are no longer enough, because the speakers are not isolated from each other the way headphone earpieces are. Sound from each speaker reaches both ears at once, which would completely ruin the 3D scene.

Therefore, another algorithm, crosstalk cancellation, is needed to suppress this leakage. The result is transaural stereo sound, in which each ear hears only its own channel (that is, only one of the speakers).
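Under strongly simplified assumptions (a symmetric setup, a single frequency, fixed and perfectly known acoustic paths, hypothetical values), crosstalk cancellation boils down to inverting a 2×2 matrix: the speaker feeds are chosen so that each speaker's leakage to the opposite ear is cancelled by the other speaker. This is the textbook principle behind transaural playback, not Dolby's implementation; practical systems do this across the whole spectrum, regularize the inversion, and work best in a narrow listening sweet spot.

```python
import cmath
from typing import Tuple


def crosstalk_cancel(d_left: complex, d_right: complex,
                     ipsi: complex, contra: complex) -> Tuple[complex, complex]:
    """Speaker feeds that deliver the desired binaural signals to each ear (one frequency bin).

    Symmetric model: each ear hears its own speaker through `ipsi` and the
    opposite speaker through `contra`:
        ear_L = ipsi * s_L + contra * s_R
        ear_R = contra * s_L + ipsi * s_R
    Inverting this 2x2 system gives the speaker feeds s_L and s_R.
    """
    det = ipsi * ipsi - contra * contra
    if abs(det) < 1e-12:
        raise ValueError("ipsilateral and contralateral paths are too similar to invert")
    s_left = (ipsi * d_left - contra * d_right) / det
    s_right = (ipsi * d_right - contra * d_left) / det
    return s_left, s_right


def at_ears(s_left: complex, s_right: complex,
            ipsi: complex, contra: complex) -> Tuple[complex, complex]:
    """What each ear actually receives from the two speakers (same model)."""
    return ipsi * s_left + contra * s_right, contra * s_left + ipsi * s_right


# Hypothetical acoustics at 1 kHz: the far speaker arrives at half the level, 0.2 ms later.
freq = 1000.0
ipsi = 1.0 + 0j
contra = 0.5 * cmath.exp(-2j * cmath.pi * freq * 0.0002)

# Goal: the sound should be heard in the left ear only.
s_l, s_r = crosstalk_cancel(1.0, 0.0, ipsi, contra)
ear_l, ear_r = at_ears(s_l, s_r, ipsi, contra)
print(f"left ear: {abs(ear_l):.3f}, right ear: {abs(ear_r):.3f}")
```

Without the cancellation step, feeding the signal to the left speaker alone would still put half of it into the right ear.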

That's exactly what the Dolby Atmos decoder does when you play Atmos content through a compatible smartphone's speakers. All this is so unusual that on first listen, the brain refuses to believe what is happening.

In lieu of a conclusion

In this article I wanted to talk not only about Dolby Atmos technology, but also about how the scene generally appears when listening to music on headphones. I hope the article brought a little clarity to this issue and, perhaps, made you look at familiar things from a slightly different angle.

I also want to stress once again that not all headphones are “equally useful.” The difference between expensive and cheap models goes far beyond the amount of bass, although, unfortunately, bass is one of the main criteria people focus on when discussing and choosing headphones.

Alexey, Editor-in-Chief, Deep-Review

PS

Don't forget to subscribe to our popular-science channel on mobile technology on Telegram so you don't miss the most interesting stories! If you liked this article, join us on Patreon for more!
